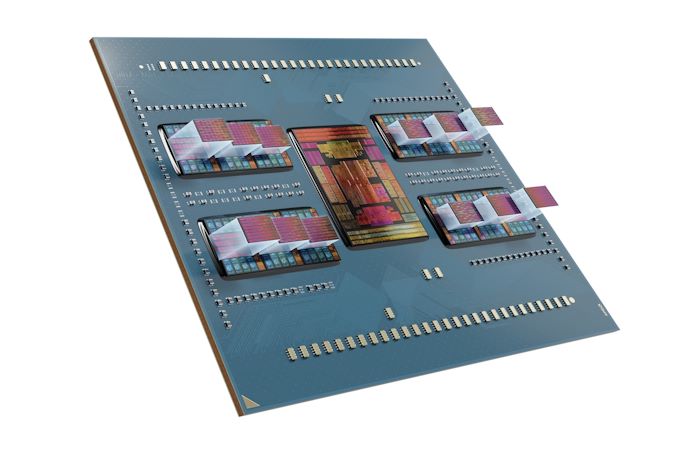

I don’t think that’s the issue. As the article says, the researchers found the flaw by reading the architecture documentation. So the flaw is in the design of the API the operating system uses to configure the CPU and related resources. This API is public (though not open source) so that operating system vendors can do their job. It usually comes with examples and pseudocode showing how some operations work. Here is an example (PDF).

Knowing how this feature is actually implemented in hardware (if the hardware were open source) would not have helped much. I would argue that, looking at the hardware, you are one level too low to properly understand the consequences of the implementation.

From the vague description in the article, it actually looks like a Meltdown- or Spectre-like issue, where some code gets executed with inappropriate privileges. Such issues are inherent to complex designs, and no amount of open source will save you there. We need a cultural shift, and maybe a paradigm shift, in how we design CPUs to fully address those issues.

My guess is that the point is to force a hierarchy so that load gets distributed. DNS is distributed in a sense. There are the root name servers, which know about all TLDs, and then each TLD has its own servers (in practice, a single entity may control multiple TLDs and allocates as many servers as needed to answer all DNS requests for them). Those “TLD servers” know about the second level, and either they also know about the lower levels or those are delegated further.

So fewer TLDs means that the “root” DNS servers do not have to keep a huge “phonebook” (mapping each TLD to the IP address of the DNS server responsible for it) and can therefore be efficient, which means that fewer of them are required. Fewer root servers means it’s easier to update them and keep them consistent. And if nearly everyone can only register second-level domains, then the root name servers do not need to be updated nearly as often.
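
To make the delegation idea a bit more concrete, here is a toy sketch in Python. It is not how a real resolver works (no caching, no network, no record types), and every server name and address in it is made up for illustration. The point is only that the root table holds one entry per TLD, while everything below that lives in tables owned by someone else.

```python
# Toy model of DNS delegation. All server names and addresses are hypothetical.

# The root "phonebook": one entry per TLD, pointing at the server responsible for it.
ROOT = {"org": "tld-org", "com": "tld-com"}

# Each lower-level server only knows about the names delegated to it.
ZONES = {
    "tld-org": {"example.org": "ns-example-org"},
    "tld-com": {"example.com": "ns-example-com"},
    "ns-example-org": {"www.example.org": "192.0.2.10"},
    "ns-example-com": {"www.example.com": "192.0.2.20"},
}


def resolve(name):
    """Walk the hierarchy from the root down; return (address, servers asked)."""
    labels = name.rstrip(".").split(".")
    server = ROOT[labels[-1]]          # the root only answers "who handles this TLD?"
    path = ["root", server]
    # Ask each server about a progressively longer suffix of the name.
    for i in range(len(labels) - 2, -1, -1):
        suffix = ".".join(labels[i:])
        answer = ZONES[server][suffix]
        if i == 0:
            return answer, path        # full name reached: this is the address
        server = answer                # otherwise it is a delegation to another server
        path.append(server)


address, path = resolve("www.example.org")
print(address, path)
```

Registering another second-level domain under `org` in this model only touches the `tld-org` table. `ROOT` changes only when a TLD is added or moved, which is the reason a short TLD list keeps the root servers small and rarely updated.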