The performance improvement claims are a bit shady, as they compare the old FG technique, which only creates one frame for every legit frame, with the next-gen FG, which can generate up to 3.

All Nvidia performance plots I’ve seen mention this at the bottom, making comparison very favorable to the 5000 series GPU supposedly.
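To put numbers on that, here is a rough sketch of how you could back out the frames a card actually renders from a chart figure that includes frame generation. The fps values are made up for illustration and are not taken from any Nvidia chart, and `rendered_fps` is just a hypothetical helper name.

```python
def rendered_fps(displayed_fps: float, generated_per_rendered: int) -> float:
    """Frames per second the game actually renders, given the displayed fps
    and how many frames are generated for every rendered frame."""
    return displayed_fps / (1 + generated_per_rendered)


# Hypothetical chart numbers, for illustration only.
old_gen = rendered_fps(displayed_fps=100.0, generated_per_rendered=1)  # old FG: 1 extra frame
new_gen = rendered_fps(displayed_fps=200.0, generated_per_rendered=3)  # new FG: up to 3 extra frames

print(f"old gen renders {old_gen:.0f} fps, new gen renders {new_gen:.0f} fps")
```

In this made-up case the chart would show "2x", while both cards render the same number of real frames.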

Edit:

Thanks for the heads up.

I really don’t like that new Frame interpolation tech and think it’s almost only useful to marketers but not for actual gaming.

At least I wouldn’t touch it with any competitive game.

Hopefully we will get third party benchmarks soon without the bullshit perfs from Nvidia.

Yes, fuck all this frame generation and upscaling bs.

From personal experience, I’d say the end result for framegen is hit or miss. In some cases, you get a much smoother framerate without any noticeable downsides, and in others, your frame times are all over the place and it makes the game look choppy. For example, I couldn’t play CP2077 with framegen at all. I had more frames, but in reality it felt like I actually had fewer. With Ark Survival Ascended, I’m not seeing any downside and it basically doubled my framerate.

Upscaling, I’m generally sold on. If you try to upscale from 1080p to 4K, it’s usually pretty obvious, but you can render at 80% of the resolution and upscale the last 20% and get a pretty big framerate bump while getting better visuals than rendering at 100% with reduced settings.
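A quick sketch of why a modest render scale gives a big framerate bump: the pixel count drops with the square of the scale. This assumes "80% of the resolution" means 80% on each axis, which is how render-scale sliders usually work; if it meant 80% of the pixel count, the numbers would differ. `pixel_fraction` is a hypothetical helper.

```python
def pixel_fraction(render_scale: float) -> float:
    """Fraction of the output pixels that get rendered when each axis
    is scaled by render_scale (assumption: per-axis scaling)."""
    return render_scale ** 2


for label, scale in [("80% render scale", 0.8), ("1080p -> 4K", 1080 / 2160)]:
    print(f"{label}: {pixel_fraction(scale):.0%} of the output pixels are rendered")
```

So an 80% scale shades roughly two thirds of the pixels, while 1080p to 4K shades only a quarter, which fits with the second case being much easier to spot.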

That said, I would rather have better actual performance than just perceived performance.

Eh I’m pretty happy with the upscaling. I did several tests and upscaling won out for me personally as a happy middle ground to render Hunt Showdown at 4k vs running at 2k with great FPS and no upscaling or 4k with no upscaling but bad FPS.

Legitimate upscaling is fine, but this DLSS/FSR ML upscaling is dogshit and just introduces so many artifacts. It has become a crutch for developers, so they don’t build their games properly anymore. Hardware is so strong and yet games perform worse than they did 10 years ago.

I mean this is FSR upscaling that I’m referring to. I did several comparisons and determined that it looked significantly better to upscale using FSR from 2K -> 4k than it did to run at 2k.

Hunt has other ghosting issues but they’re related to CryEngine’s fake ray tracing technology (unrelated to the Nvidia/AMD ray tracing) and they happen without any upscaling applied.

Too bad FSR will be AMD hardware exclusive from here on out

I wouldn’t say fuck upscaling entirely, especially for 4k it can be useful on older cards. FSR made it possible to play Hitman on my 1070. But yeah, if I’m going for 4k I probably want very good graphics too, eg. in RDR2, and I don’t want any upscaling there. I’m so used to native 4k that I immediately spot if it’s anything else - even in Minecraft.

And frame generation is only useful in non-competitive games where you already have over 60 FPS, otherwise it will still be extremely sluggish, in which case it’s not really useful anymore.

The point is, hardware is powerful enough for native 4K, but instead of that power being used properly, games are made quickly and then upscaling technology is slapped on at the end. DLSS has become a crutch and Nvidia are happy to keep pushing it and keeping a reason for you to buy their GPUs every generation, because otherwise we are at diminishing returns already.

It’s useful on older hardware, yes, and I have no issue with that. I have an issue with it being used on hardware that could otherwise easily run 4K 120FPS+ with standard rasterization, and with it being marketed as a ‘must’.

On the site with the performance graphs, Far Cry and Plague Tale should be more representative, if you want to ignore FG. That’s still only two games, with first-party benchmarks, so wait for third-party anyway.

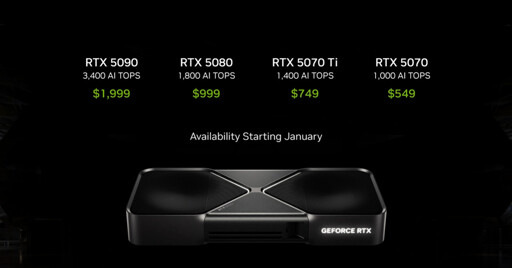

Maybe I’m stuck in the last decade, but these prices seem insane. I know we’ve yet to see what a 5050 (lol) or 5060 would be capable of or its price point. However launching at $549 as your lowest card feels like a significant amount of the consumer base won’t be able to buy any of these.

Sadly I think this is the new normal. You could buy a decent GPU, or you could buy an entire game console. Unless you have some other reason to need a strong PC, it just doesn’t seem worth the investment.

At least Intel are trying to keep their prices low. Until they either catch on, in which case they’ll raise prices to match, or they fade out and leave everyone with unsupported hardware.

Actually AMD has said they’re ditching their high end options and will also focus on budget and midrange cards. AMD has also promised better raytracing performance (compared to their older cards) so I don’t think it will be the new norm if AMD also prices their cards competitively to Intel. The high end cards will be overpriced as it seems like the target audience doesn’t care that they’re paying a shitton of money. But budget and midrange options might slip away from Nvidia and get cheaper, especially if the upscaler crutch breaks and devs have to start doing actual optimizations for their games.

Actually AMD has said they’re ditching their high end options

Which means there’s no more competition in the high-end range. AMD was lagging behind Nvidia in terms of pure performance, but the price/performance ratio was better. Now they’ve given up a segment of the market, and consumers lose out in the process.

The high end crowd showed there’s no price competition, there’s only performance competition and they’re willing to pay whatever to get the latest and greatest. Nvidia isn’t putting a 2k pricetag on the top of the line card because it’s worth that much, they’re putting that pricetag because they know the high end crowd will buy it anyway. The high end crowd has caused this situation.

You call that a loss for the consumers, I’d say it’s a positive. The high end cards make up like 15% (and I’m probably being generous here) of the market. AMD dropping the high end and focusing on mid-range and budget cards is much more beneficial for most users. Budget and mid-range cards make up the majority of the PC users. If the mid-range and budget cards are affordable that’s much more worthwhile to most people than having high end cards “affordable”.

But they’ve been selling mid-range and budget GPUs all this time. They’re not adding to the existing competition there, because they already have a share of that market. What they’re doing is pulling out of a segment where there was (a bit of) competition, leaving a monopoly behind. If they do that, we can only hope that Intel puts out high-end GPUs to compete in that market, otherwise it’s Nvidia or nothing.

Nvidia already had the biggest share of the high-end market, but now they’re the only player.

It’s already Nvidia or nothing. There’s no point fighting with Nvidia in the high end corner because unless you can beat Nvidia in performance there’s no winning with the high end cards. People who buy high end cards don’t care about a slightly worse and slightly cheaper card because they’ve already chosen to pay premium price for premium product. They want the best performance, not the best bang for the buck. The people who want the most bang for the buck at the high end are a minority of a minority.

But on the other hand, by dropping high end cards AMD can focus more on making their budget and mid-range cards better instead of diverting some of their focus on the high end cards that won’t sell anyway. It increases competition in the budget and mid-range section and mid-range absolutely needs stronger competition from AMD because Nvidia is slowly killing mid-range cards as well.

TIL, I’m a minority of a minority.

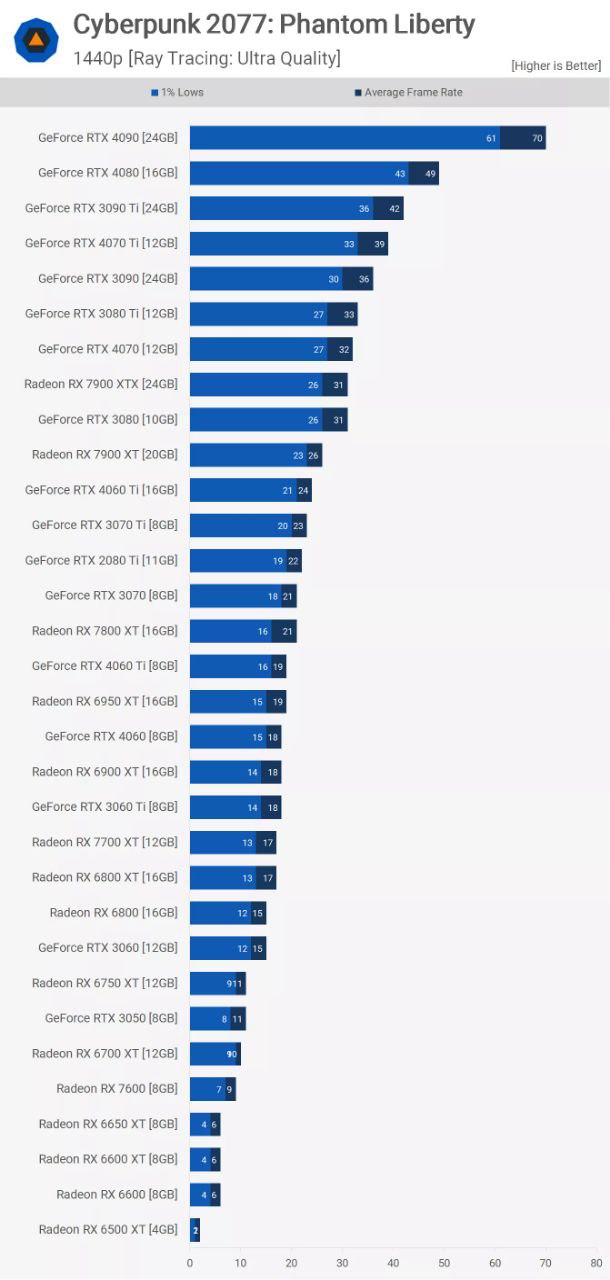

Overclocked a $800 AMD 7900XTX to 3.4 GHz core with +15% overvolt (1.35V), total power draw of 470W @86°C hotspot temp under 100% fan duty cycle.

Matches the 3DMark score in Time Spy for an RTX 4090D almost to the number.

63 FPS @ 1440p Ray Tracing: Ultra (Path Tracing On) in CP2077

As always, buying a used previous gen flagship is the best value.

They’ll sell out anyways due to lack of good competition. Intel is getting there but still has driver issues, AMD didn’t announce their GPU prices yet but their entire strategy is following Nvidia and lowering the price by 10% or something.

AMD is the competition.

AMD hasn’t been truly competitive with nVidia in quite a long time.

AMD has been taking over market share slowly but surely. And the console gaming market… and the portable gaming market… and the chips outperform Intel chips over and over. But ya sure.

I don’t dispute that AMD is eating Intel’s lunch, but performance-wise, AMD has nothing for nVidia. And that’s what the discussion is about, performance. AMD simply doesn’t hold a candle.

Weird completely unrelated question. Do you have any idea why you write “Anyway” as “Anyways”?

It’s not just you, it’s a lot of people, but unlike most grammar/word modifications it doesn’t really make sense to me. Most of the time the modification shortens the word in some way rather than lengthening it. I could be wrong, but I don’t remember people writing or saying “anyway” with an added “s” in any way but ironically 10-15 years ago, and I’m curious where it may be coming from.

I also write anyways that way, and so does everyone I know, I think it’s a regional thing

I guess I’m used to saying it since I spent a long time not knowing it’s the wrong pronunciation for it.

Interesting. Thanks.

Don’t pick on the parseltongue.

https://grammarist.com/usage/anyways/

Although considered informal, anyways is not wrong. In fact, there is much precedent in English for the adverbial -s suffix, which was common in Old and Middle English and survives today in words such as towards, once, always, and unawares. But while these words survive from a period of English in which the adverbial -s was common, anyways is a modern construction (though it is now several centuries old).

Schrödinger’s word. Both new and old, lol

So much of nvidia’s revenue is now datacenters, I wonder if they even care about consumer sales. Like their consumer level cards are more of an advertising afterthought than actual products.

You have to keep inflation in mind. $550 today would be about $450 in 2019 dollars.
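If you want to run the same adjustment on other prices, a minimal sketch is below. The 22% cumulative inflation figure is an assumption backed out of the 550 -> 450 numbers above, not an official CPI value, and `to_past_dollars` is a hypothetical helper.

```python
def to_past_dollars(price_today: float, cumulative_inflation: float) -> float:
    """Convert a price in today's dollars into dollars of an earlier year,
    given the cumulative inflation between the two dates (0.22 means 22%)."""
    return price_today / (1 + cumulative_inflation)


# Assumption: roughly 22% cumulative inflation since 2019, which is what
# "550 would be 450" implies. Swap in a real CPI ratio for exact figures.
ASSUMED_INFLATION_SINCE_2019 = 0.22

launch_price = 549  # lowest launch price mentioned in the thread
print(round(to_past_dollars(launch_price, ASSUMED_INFLATION_SINCE_2019)))
```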

Yeah, I keep forgetting how much time has passed.

Bought my first GPU, an R9 Fury X, for MSRP when it launched. The R9 300 series and GTX 900 series seemed fairly priced then (aside from the Titan X). Bought another for Crossfire and mining, holding on until I upgraded to a 7800 XT.

Comparing prices, all but the 5090 are within $150 of each other when accounting for inflation. The 5090 is stupid expensive. A $150 increase in price over a 10-year period probably isn’t that bad.

I’m still gonna complain about it and embrace my inner “old man yells at prices” though.

Don’t forget to mention the huge wattage.

For me, more performance means more fps at the same amount of power.

By rendering only 25% of the frames we made DLSS4 100% faster than DLSS3. Which only renders 50% of the frames! - NVIDIA unironically

You’re living in the past, rendering 100% of the frames is called Brute Force Rendering, that’s for losers.

With only 2k trump coins our new graphic card can run Cyberpunk 2077, a game from 4 years ago, at 30 fps with RTX ON but you see with DLSS and all the other crapmagic we can run at 280 FPS!!! Everything is blurry and ugly as fuck but look at the numbers!!!

They’re not even pretending to be affordable any more.

Nvidia is just doing what every monopoly does, and AMD is just playing into it like they did on CPUs with Intel. They’ll keep competing for price performance for a few years then drop something that drops them back on top (or at least near it).

Buy 800 of those or buy a house. Pick.

I can’t do either of those. I chose Option C, a hospital visit.

Look at money bags over here. He can go to the HoSpItAl.

Unfortunately, that’s the anti-scalper countermeasure. Crippling their crypto mining potential didn’t impact scalping very much, so they increased the price with the RTX 40 series. The RTX 40s were much easier to find than the RTX 30s were, so here we are for the RTX 50s. They’re already on the edge of what people will pay, so they’re less attractive to scalpers. We’ll probably see an initial wave of scalped 5090s for $3500-$4000, then it will drop off after a few months and the market will mostly have un-scalped ones with fancy coolers for $2200-$2500 from Zotac, MSI, Gigabyte, etc.

The switch from proof of work to proof of stake in ETH right before the 40 series launch was the primary driver of the increased availability.

The existence of scalpers means demand exceeds supply. Pricing them this high is a countermeasure against scalpers… in that Nvidia wants to make the money that scalpers would have made.

Not really a countermeasure, but the scalping certainly proved that there are a lot of people willing to buy their stuff at high prices.

No, it’s a direct result of observing the market during those periods and seeing the lemmings beating down doors to pay 600-1000 dollars over MSRP. They realized the market is stupid and will bear the extra cost.

This is absolutely 3dfx level of screwing over consumers and all about just faking frames to get their “performance”.

Welcome to the future

What if I’m buying a graphics card to run Flux or an LLM locally. Aren’t these cards good for those use cases?

Except you cannot use them for AI commercially, or at least in data center setting.

“T-BUFFER! MOTION BLUR! External power supplies! Wait, why isn’t anyone buying this?”

LOL, their demo shows Cyberpunk running at a mere 27fps on the 5090 with DLSS off. Is that supposed to sell me on this product?

Their whole gaming business model now is encouraging devs to stick in features that have no hope of rendering quickly in order to sell this new frame generation rubbish.

The 4090 gets like sub 20fps without DLSS and stuff. Seems like a good improvement.

Barely

50% improvement not enough for you?

Whether it’s 50% or 200% it’s pointless if the avg FPS can’t even reach the bare minimum of 30.

What does that have to do with anything?

Not every game has frame gen… not everybody wanna introduce lag to input. So 50% is 100% sketchy marketing. You can keep your 7 frames, Imma wait for 6090

Both figures are without DLSS, FG, whatever. Just native 4k with Path Tracing enabled, that’s why it’s so low.

The sketchy marketing is comparing a 5070 with a 4090, but that’s not what this is about.

Like I said, I judge performance without frame gen. Without frame gen, the 5090 is not twice as powerful as a 4090, which is what they advertise.

I’ll just keep buying AMD thanks.

Okay losers, time for you to spend obscene amounts to do your part in funding the terrible shit company nvidia.

Okay loser, so what GPU should we buy then?

A used 2080.

That would be a downgrade for me. And you know, same company…

“Used” is not “same company” because you’re not buying from said company.

You still gotta use their shitty NVIDIA experience app (bloated with ads that make NVIDIA money when you open it), and you buying a used NVIDIA card increases demand (and thus prices) on all NVIDIA cards.

If you are a gamer and not doing AI stuff then buying a non-NVIDIA card is entirely an option.

The GeForce Experience app is dead, thank goodness, with no account or sign-in required anymore.

Good fucking riddance

NVCleanInstall is our friend :)

Market share is not as simple as measuring directly sold products.

And if you buy it used, nVidia doesn’t get any of that money.

Sure, if your understanding of the economy is based off of a lemonade stand at a dollar a cup…

If I buy a used card from an individual, nVidia doesn’t get a penny. How is that complicated?

Ok…they do. They get increased market share, which is measurable and valuable to shareholders, increasing stock value and increasing company liquidity.

Way better investment to buy their stock rather than one of their GPUs, IMO.

I’m sure these will be great options in 5 years when the dust finally settles on the scalper market and they’re about to roll out RTX 6xxx.

Scalpers were basically non existent in the 4xxx series. They’re not some boogieman that always raises prices. They work under certain market conditions, conditions which don’t currently exist in the GPU space, and there’s no particular reason to think this generation will be much different than the last.

Maybe on the initial release, but not for long after.

The 4090 basically never went for MSRP until Q4 2024… and now it’s OOS everywhere.

Nobody scalped the 4080 because it was shit price/perf. 75% of the price of a 4090 too… so why not just pay the extra 25% and get the best?

The 4070 Ti (aka base 4080) was too pricey to scalp, given that once you start cranking up the price, why not pay the scalper fee for a 4090?

Things below that are not scalp worthy.

The 4090 basically never went for MSRP until Q4 2024

This had nothing to do with scalpers though. Just pure corporate greed.

I’m not so sure. Companies were definitely buying many up, but they typically stick to business purchasing channels like CDW/Dell/HP etc.

Consumer boxed cards sold by retailers might have gone to some small businesses/startups and independent developers but largely they were picked up by scalpers or gamers.

I work in IT and have never gone to a store to buy a video card unless it was an emergency need to get a system functional again. It’s vastly preferred to buy things through a VAR where warranties and support are much more robust than consumer channels.

Scalpers were basically non existent in the 4xxx series.

Bull fucking shit. I was trying to buy a 4090 for like a year. Couldn’t find anything even approaching retail. Most were $2.3k+.

My last new graphics card was a 1080, I’ve bought second hand since then and will keep doing that cause these prices are…

I’m still using that 1080Ti and currently see no reason to upgrade.

FF VII Rebirth will require an RTX 20, but I think that’s down to lack of optimization

Rebirth also says it requires 12 gigs of vram, my 3080 is crying.

Still using a 1080 Ti and I definitely have lots of reasons to upgrade, but I’m not willing to spread the cheeks that wide.

Two problems, they are big ones:

- The hardware is expensive for a marginal improvement

- The games coming out that best leverage the features like Ray tracing are also expensive and not good

Nvidia claims the 5070 will give 4090 performance. That’s a huge generation uplift if it’s true. Of course, we’ll have to wait for independent benchmarks to confirm that.

The best ray tracing games I’ve seen are applying it to older games, like Quake II or Minecraft.

I expect they tell us it can achieve that because under the hood DLSS4 gives it more performance if enabled.

But is that a fair comparison?

They’ve already said it’s all because of DLSS 4. The 5070 needs the new 4x FG to match the 4090, although I don’t know if the 4090 has the “old” 2x FG enabled, probably not.

The Far Cry benchmark is the most telling. Looks like it’s around a 15% uplift based on that.

About two months ago I upgraded from a 3090 to a 4090. On my 1440p monitor I basically couldn’t tell. I play mostly MMOs and ARPGs.

Shouldn’t have upgraded then….

Those genres aren’t really known for having brutal performance requirements. You have to play the bleeding edge stuff that adds prototype graphics postprocessing in their ultra or optional settings.

When you compare non RT performance the frame delta is tiny. When you compare RT it’s a lot bigger. I think most of the RT implementations are very flawed today and that it’s largely snake oil so far, but some people are obsessed.

I will say you can probably undervolt / underclock / power throttle that 4090 and get great frames per watt.

I think it’s going to be a long time before I upgrade my graphics card with these prices.

More Shenanigans. Moooore !

Nvidia Core i5.